Below is a list of 6 facts (and counting) you should know before whining and complaining about the infamous false-positive (FP) topic. If you’ve been there, feel free to comment and share your pain or your own facts.

As you know, FPs are everywhere and multiplying just like Gremlins after a shower! [Misled Millennials, click here] In fact, they seem to be part of 12 in every 10 Security Monitoring projects out there, including those heavily relying on SIEM technology.

So here’s the list of facts and some quick directions:

#1 Canned content sucks

Bad news. Out-of-the-box things don’t go well with Security Monitoring, my friend. Your adversaries are quite creative and you need to catch up.

But before bashing vendors, think about it from their perspective. Would you release a product without any content? The canned rules are there to give you an idea of the product’s usage, and they are meant for general cases. You need to RTFM and spend a great deal of time evaluating and testing the product features to enable customization.

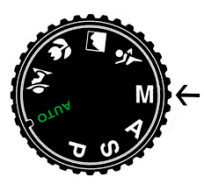

If you are into #photography, let me ask you: are you still shooting on automatic, generating JPEGs, with lower resolution (more shots!) out of your fully customizable camera? If you want to boost your chances of having that perfect shot, you need to go manual and experiment with all possible settings.

The technology is just another tool. Regardless of the feature set, you should be able to get the best out of it by translating your knowledge into a tailor-made rule set.

#2 You don’t have a scope

Since consuming a risk analysis result as an input for your Security Monitoring program is pretty much a utopia, you need to find another way to define an initial scope. This will drive the custom rules development cycle and define the monitoring coverage (detective controls).

We tend to go with low-hanging fruit or quick wins, but even then, you still need to spend some time defining your goals. And here the “Use Cases” conversation starts, perhaps one of the most important in the program!

Actually, it deserves a full article of its own, but I will leave you with a simple, yet interesting approach delivered by Augusto Barros (Gartner). I like how he pretty much defines the scope as the intersection between Importance (value) and Feasibility (effort).
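To make the Importance vs. Feasibility idea a bit more concrete, here is a minimal sketch of how a team might shortlist use cases. The 1 to 5 scales, the thresholds and the example use cases are all assumptions made up for illustration; they are not part of Augusto Barros’ approach.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    importance: int   # 1 (low value) to 5 (high value), hypothetical scale
    feasibility: int  # 1 (hard to build) to 5 (easy to build), hypothetical scale


def initial_scope(candidates, min_importance=4, min_feasibility=3):
    """Keep use cases that are both valuable and achievable, best first."""
    selected = [
        uc for uc in candidates
        if uc.importance >= min_importance and uc.feasibility >= min_feasibility
    ]
    # Most important first; feasibility breaks ties.
    return sorted(
        selected,
        key=lambda uc: (uc.importance, uc.feasibility),
        reverse=True,
    )


# Hypothetical candidates scored by the team.
candidates = [
    UseCase("Brute force against VPN gateway", importance=5, feasibility=4),
    UseCase("Data exfiltration over DNS", importance=5, feasibility=2),
    UseCase("Printer firmware changes", importance=2, feasibility=5),
    UseCase("New local admin account created", importance=4, feasibility=5),
]

scope = [uc.name for uc in initial_scope(candidates)]
```

With these made-up scores, only the VPN brute force and the new local admin use cases land in the initial scope. The DNS exfiltration one is important but too hard for a first iteration, so it waits for the next round.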

#3 People > Technology

No matter how cool and easy to use the technology is, if you don’t have a skilled team to work on #1 (RTFM and experiment) and #2 (define goals), you will likely end up with a bunch of unattended alerts.

Therefore, don’t hire deployers. Hire Security Content Designers and Security Content Engineers, and enable them to extract the value of your security arsenal (investment).

#4 Exception handling is not optional

When I say tailor-made, I mean your rule should already address exceptions to a certain extent. This is also called whitelisting.

If during development you realize a rule is generating too many alerts, try to anticipate the analyst’s call and filter out scenarios that are not worth investigating.

The technology MUST provide easy ways to quickly add/modify/remove exceptions. Bonus for those products providing auditing (who, when, why).
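As a rough illustration of what an auditable exception could look like, here is a small sketch. The `RuleException` structure, the field names and the sample values are hypothetical and not taken from any particular product; the point is only that each exception carries its who, when and why.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class RuleException:
    rule: str           # rule the exception applies to
    field: str          # event field to match
    value: str          # value that should not raise an alert
    added_by: str       # who
    added_at: datetime  # when
    reason: str         # why


def is_excepted(rule, event, exceptions):
    """Return the first matching exception, or None if the event should alert."""
    for exc in exceptions:
        if exc.rule == rule and event.get(exc.field) == exc.value:
            return exc
    return None


# Hypothetical exception list for a port scan rule.
exceptions = [
    RuleException(
        rule="port_scan",
        field="src_ip",
        value="10.0.0.5",
        added_by="analyst1",
        added_at=datetime(2024, 1, 15, tzinfo=timezone.utc),
        reason="Authorized vulnerability scanner",
    ),
]

scanner_event = {"src_ip": "10.0.0.5", "dst_ip": "10.0.1.20"}
unknown_event = {"src_ip": "10.0.0.99", "dst_ip": "10.0.1.20"}

matched = is_excepted("port_scan", scanner_event, exceptions)      # suppressed
not_matched = is_excepted("port_scan", unknown_event, exceptions)  # alerts
```

Returning the matching exception, rather than a plain True/False, lets the analyst see why an event was suppressed and who approved it.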

#5 Aggregation is key

Have you noticed how many alerts are related to the very same case or target?

Some technologies (and security engineers), SIEMs especially, dare to generate the very same alert multiple times, simply because the time range considered for processing the events overlaps from one run to the next: it is longer than the rule’s execution interval itself. “Run every hour, checking the last 5 days”. WTH?
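One way to avoid that overlap is to make each run pick up exactly where the previous one stopped. The sketch below assumes a hypothetical scheduler that remembers the end of the last search window; real products expose this differently, if at all.

```python
from datetime import datetime, timedelta


def search_window(run_time, last_window_end, interval):
    """Return (start, end) covering only events not yet processed.

    The first run (no checkpoint yet) looks back exactly one interval.
    """
    if last_window_end is None:
        start = run_time - interval
    else:
        start = last_window_end
    return start, run_time


def in_window(timestamp, window):
    """Half-open check [start, end), so boundary events are matched only once."""
    start, end = window
    return start <= timestamp < end


interval = timedelta(hours=1)
first = search_window(datetime(2024, 1, 1, 10, 0), None, interval)
second = search_window(datetime(2024, 1, 1, 11, 0), first[1], interval)

# An event right on the boundary belongs to the second run only.
boundary_event = datetime(2024, 1, 1, 10, 0)
hits = [in_window(boundary_event, first), in_window(boundary_event, second)]
```

If a rule genuinely needs a long lookback (slow scans, for example), this sketch does not apply as-is, and deduplicating on an alert key would be the alternative to explore.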

What about aggregating on the target (or victim, if you will)? Not all products provide such functionality, but most SIEMs do. So instead of generating multiple alerts for every single hit on your data stream, why not consolidate those events into a single alert? Experiment with aggregating on unique targets to start with.
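Aggregating on the target can be sketched in a few lines. The event fields used here (`dst_ip`, `src_ip`, `rule`) are assumptions for the example, not a real SIEM schema.

```python
from collections import defaultdict


def aggregate_by_target(hits, target_field="dst_ip"):
    """Consolidate individual rule hits into one alert per unique target."""
    grouped = defaultdict(list)
    for hit in hits:
        grouped[hit[target_field]].append(hit)

    return [
        {
            "target": target,
            "hit_count": len(events),
            "sources": sorted({e["src_ip"] for e in events}),
            "rules": sorted({e["rule"] for e in events}),
        }
        for target, events in grouped.items()
    ]


# Hypothetical hits coming out of the data stream.
hits = [
    {"rule": "port_scan", "src_ip": "10.0.0.7", "dst_ip": "10.0.1.20"},
    {"rule": "port_scan", "src_ip": "10.0.0.8", "dst_ip": "10.0.1.20"},
    {"rule": "brute_force", "src_ip": "10.0.0.7", "dst_ip": "10.0.1.20"},
    {"rule": "port_scan", "src_ip": "10.0.0.7", "dst_ip": "10.0.1.30"},
]

alerts = aggregate_by_target(hits)
```

Four hits become two alerts, one per target, and the analyst still gets the list of sources and rules involved in each.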

#6 Threat Intel data != IDS signature

There’s not much to say here. With all the Threat Intel porn hype nowadays, just go ahead and read Alex Sieira’s nice blog post: Threat Intelligence Indicators are not Signatures.

Help spread the love for FPs and share your thoughts!

Great write up Alex!!!

Hey Alex, congrats. You’re killing it!!! :)

Via LinkedIn by Anders Reed-Mohn:

While every point in your post is correct, by itself, you’ve missed the mark when it comes to the original problem. Those of us with a certain level of understanding already get the problems you point to. But those people are too often not the same people that buy the “solutions”. The vendors deliberately downplay the importance of your customisation, and sell “solutions” to people who unfortunately can be fooled. If people would realise they bought tools, not “solutions”, we’d come a long way. But unfortunately, marketing works, and is still a legal form of lying to your customers. So yes, while we could avoid the problem, not all the barriers lie in your (otherwise very correct) points.

My Re:

I see what you mean, I’ve also been there (buying solutions/services) as an architect before, so I assume you also have some ways to influence the decision, which has worked quite well in my case (persuading, getting Ops and other teams on-board, tech pros/cons, investment pros/cons, etc).

I don’t expect those people to read this post, but rather the ones with the power to influence, like you. Fooling management (decision makers) happens in every industry, not only in ours, and honestly, I am not sure if we will ever have a solution for that! For every brilliant techie making it into management/leadership, there are 1000 fools in the same boat.

BTW, loved this line from you: “If people would realise they bought tools, not “solutions”, we’d come a long way.”
